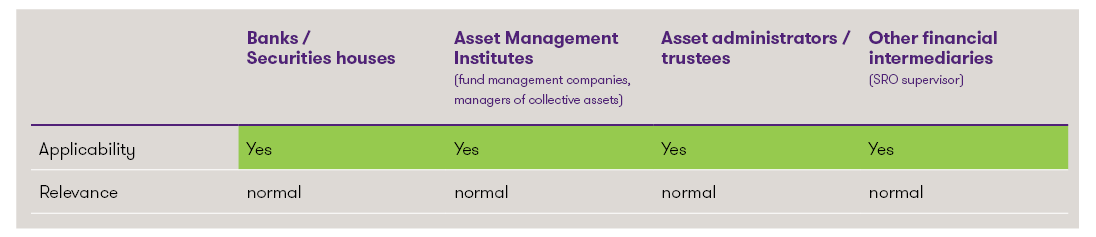

Classification¹

¹ This is a highly simplified presentation, which should enable a quick initial classification of the topic. Each institution should determine the relevance and the concrete need for action individually.

Artificial intelligence in the Swiss financial market

In Switzerland, the Federal Council decided on 22 November 2023 to create the basis for future AI regulation. The Federal Department of the Environment, Transport, Energy and Communications (DETEC) was tasked with identifying, by the end of 2024, possible approaches to regulating AI in Switzerland that are compatible with regulatory developments in the EU.

The importance of AI has risen sharply in recent times, particularly in the financial industry, where the range of possible applications is wide. In its Risk Monitor 2023, FINMA identified AI as a long-term trend but also as a strategic risk. It has announced that it will review the use of AI at supervised institutions and closely monitor further developments in Switzerland and abroad. Specifically, FINMA formulated the following four expectations for financial service providers that use AI:

- Governance and accountability: Clear roles and responsibilities as well as risk management processes must be defined. Responsibility for decisions cannot be delegated to AI or third parties. All parties involved must have sufficient expertise in the field of AI.

- Robustness and reliability: When developing, customising and using AI, it must be ensured that the results are accurate, robust and reliable.

- Transparency and explainability: The explainability of the results of an AI application and the transparency of its use must be ensured depending on the recipient, relevance and process integration.

- Equal treatment: Unjustifiable unequal treatment of different groups of people should be avoided.

EU regulation on artificial intelligence (AI Regulation)

With the political compromise reached by the European Commission, the Council of the European Union and the European Parliament on 8 December 2023, the EU took a major step towards AI legislation. To define an AI system, EU legislators are drawing on the work of the OECD, although the exact definition will only be known once the final version of the AI Regulation is available. The OECD defines an AI system as a "machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments".

The AI Regulation will contain a risk-based classification of the various AI systems based on the potential risks to society and fundamental rights. A distinction is made between the following risk levels:

- Systems with an unacceptable risk

Systems that violate fundamental rights pose an unacceptable risk and are therefore banned. These include, for example, state social scoring systems or real-time biometric identification systems in public spaces.

- Systems with high risk

Systems where there is a significant risk that they could jeopardise the security and fundamental rights of individuals. This category includes systems that are used in sensitive areas such as critical infrastructure or education. These systems are subject to strict requirements (conformity assessment, examination of the impairment of fundamental rights, etc.).

- Systems with limited risk

Systems with only limited risks are subject to certain disclosure obligations. This category includes "chatbots" or "deepfakes", for example. These systems require transparent information to be provided to users that they are interacting with an AI system.

- Systems with no or minimal risk

Systems that pose no or only minimal risks to society, such as AI-controlled spam filters. Most AI systems should fall into this category. Although no specific obligations are introduced for these systems, providers can voluntarily comply with a code of conduct.

In addition to these four risk categories, the AI Regulation introduces specific documentation and transparency obligations for general purpose AI (GPAI) models. To standardise the assessment of compliance with various aspects of the AI Regulation, compliance will be presumed if harmonised technical standards are met. The European Commission has already commissioned the development of such standards by 2025.

Similar to data protection law, there will be heavy fines for breaches of the AI Regulation, which can amount to up to EUR 35 million or 7% of annual global turnover, depending on the severity and type of offence. Implementation work is underway, and the final version of the AI Regulation is expected in Q1 2024. The AI Regulation is expected to enter into force two years after adoption.
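The upper bound on fines mentioned above can be illustrated with a short calculation. This is a minimal sketch only: the function name `max_fine_eur` is hypothetical, and it assumes that the higher of the two figures sets the ceiling for the most serious offences, which the summary above does not state explicitly. The final text of the AI Regulation should be consulted for the applicable rule and for the lower tiers.

```python
# Illustrative only: ceiling for the most serious category of offence.
# Assumption (not stated in the text above): the higher of the fixed
# amount and the turnover-based amount applies.

FIXED_CAP_EUR = 35_000_000       # EUR 35 million
TURNOVER_SHARE_PERCENT = 7       # 7% of annual global turnover


def max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Return the assumed upper bound of a fine in whole euros."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = annual_global_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    # Institution with EUR 100 million turnover: the fixed amount dominates.
    print(max_fine_eur(100_000_000))     # 35000000
    # Institution with EUR 1 billion turnover: the 7% figure dominates.
    print(max_fine_eur(1_000_000_000))   # 70000000
```

Under this assumption, the turnover-based figure becomes the relevant ceiling once annual global turnover exceeds EUR 500 million.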

Due to its extraterritorial scope, the AI Regulation will also apply to Swiss companies i) that place an AI system on the market in the EU, ii) whose AI system is used in the EU, or iii) whose AI system produces output that affects people in the EU.
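For an initial internal screening, the three triggers above can be written down as a simple checklist. The sketch below is an illustration, not a legal test: the class `AiSystemFootprint`, its fields and the function `scope_triggers` are hypothetical names, and whether a trigger is actually met depends on the final text of the AI Regulation and on the facts of each case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AiSystemFootprint:
    """Hypothetical record of where an AI system is offered and felt."""
    placed_on_eu_market: bool          # trigger i)
    used_in_eu: bool                   # trigger ii)
    output_affects_people_in_eu: bool  # trigger iii)


def scope_triggers(footprint: AiSystemFootprint) -> list[str]:
    """Return the triggers from the text above that this system meets."""
    triggers = []
    if footprint.placed_on_eu_market:
        triggers.append("i) placed on the market in the EU")
    if footprint.used_in_eu:
        triggers.append("ii) used in the EU")
    if footprint.output_affects_people_in_eu:
        triggers.append("iii) output affects people in the EU")
    return triggers


def potentially_in_scope(footprint: AiSystemFootprint) -> bool:
    """Any single trigger is enough to warrant a closer legal review."""
    return bool(scope_triggers(footprint))


if __name__ == "__main__":
    # Example: a system operated only in Switzerland whose output
    # nevertheless affects clients resident in the EU.
    system = AiSystemFootprint(
        placed_on_eu_market=False,
        used_in_eu=False,
        output_affects_people_in_eu=True,
    )
    print(potentially_in_scope(system))
    print(scope_triggers(system))
```

The conditions are alternatives, so the check returns a positive result as soon as one of them is met.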

Conclusion

Swiss financial service providers that use or intend to use AI systems in their products and services should familiarise themselves with FINMA's expectations in this regard and with the final version of the EU's AI Regulation. They should carefully review the extent to which the relevant provisions apply to them and how these can be implemented appropriately.

Do you have questions about AI regulation? Our specialists from the Regulatory & Compliance FS team will be happy to support you. We look forward to hearing from you.